Answers Customization

Orama allows you to define custom instructions (System Prompts) that shape how the AI engine responds to users. These prompts can be tailored for various use cases, such as altering the tone of the AI, introducing information that isn’t in the data sources, or directing users toward specific actions.

For example, if a user asks about pricing, a system prompt could instruct the AI to suggest visiting a website for details instead of providing a direct answer. You could also include more context, such as software versions or other specialized information, to ensure more accurate responses.

Best Practices and Limitations

While System Prompts give you powerful control over the AI’s behavior, they are subject to validation rules that maintain safety and efficiency. Jailbreak protection guards against malicious or harmful instructions, and prompts are limited in length (under 2000 characters) and complexity (10 instructions or fewer) to ensure optimal performance.

These safeguards ensure that while you have the freedom to shape the AI’s responses, the assistant will always behave in a reliable, secure, and efficient manner.

Customizing AI Answers

This guide will help you understand how to customize the answers generated by the AI answer engine in your Orama index.

First, create a new index. If this is your first time doing so, follow this guide to set up your first index.

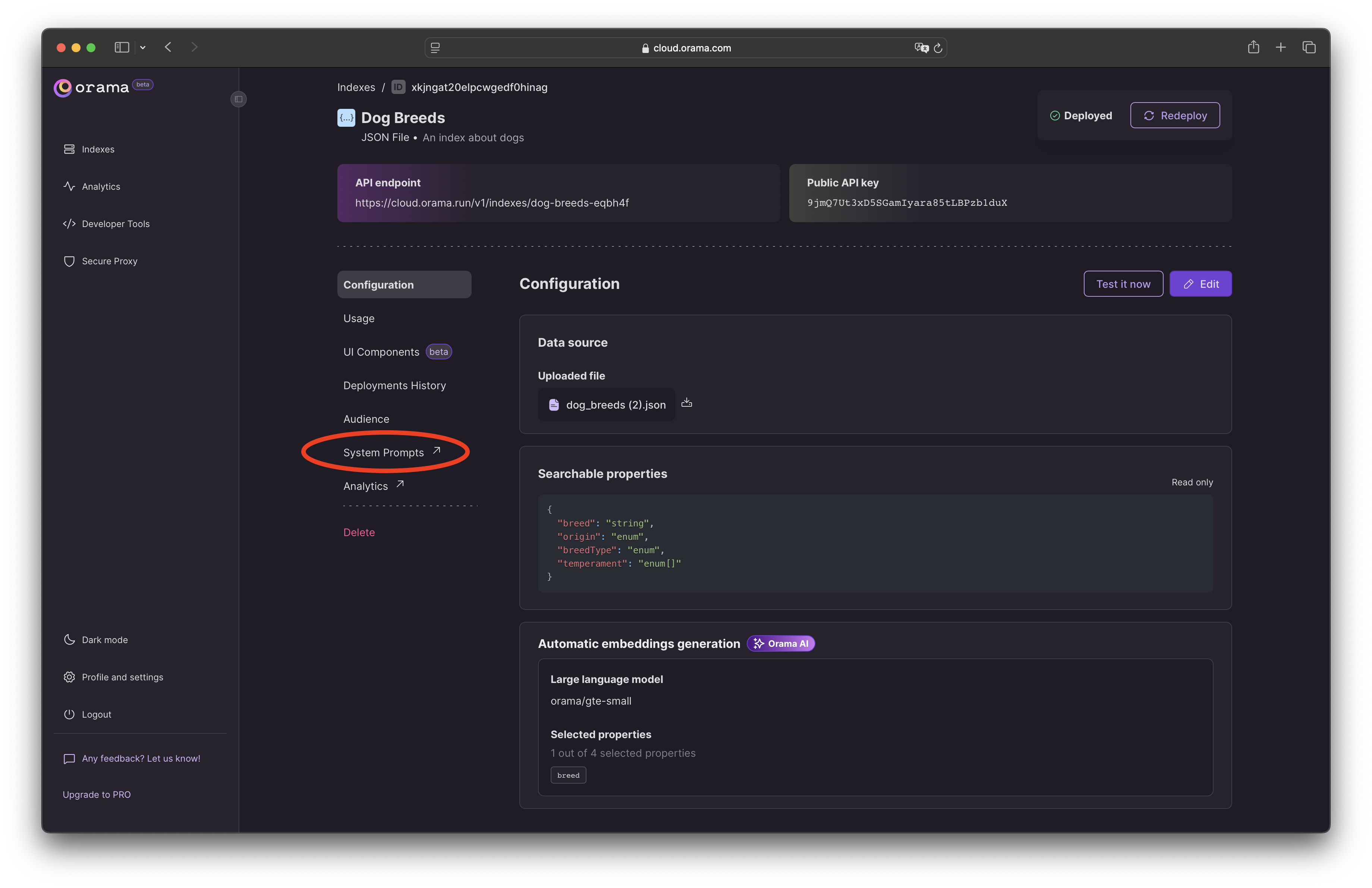

Once your index is set up, you will land on the index overview page. Click the “System Prompts” tab in the left menu to access the system prompt settings.

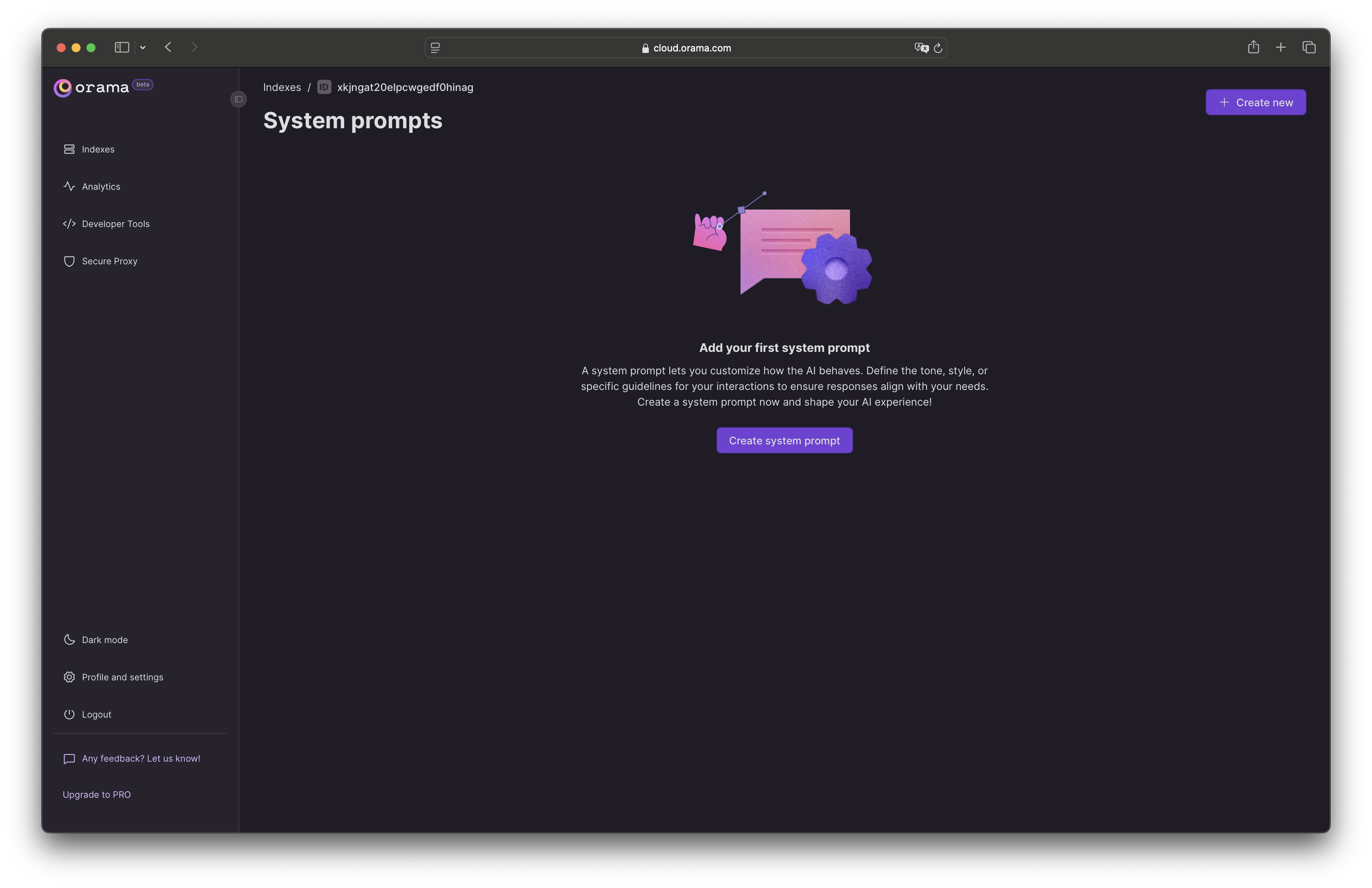

If you’re creating your first system prompt, click on “Create system prompt” to get started.

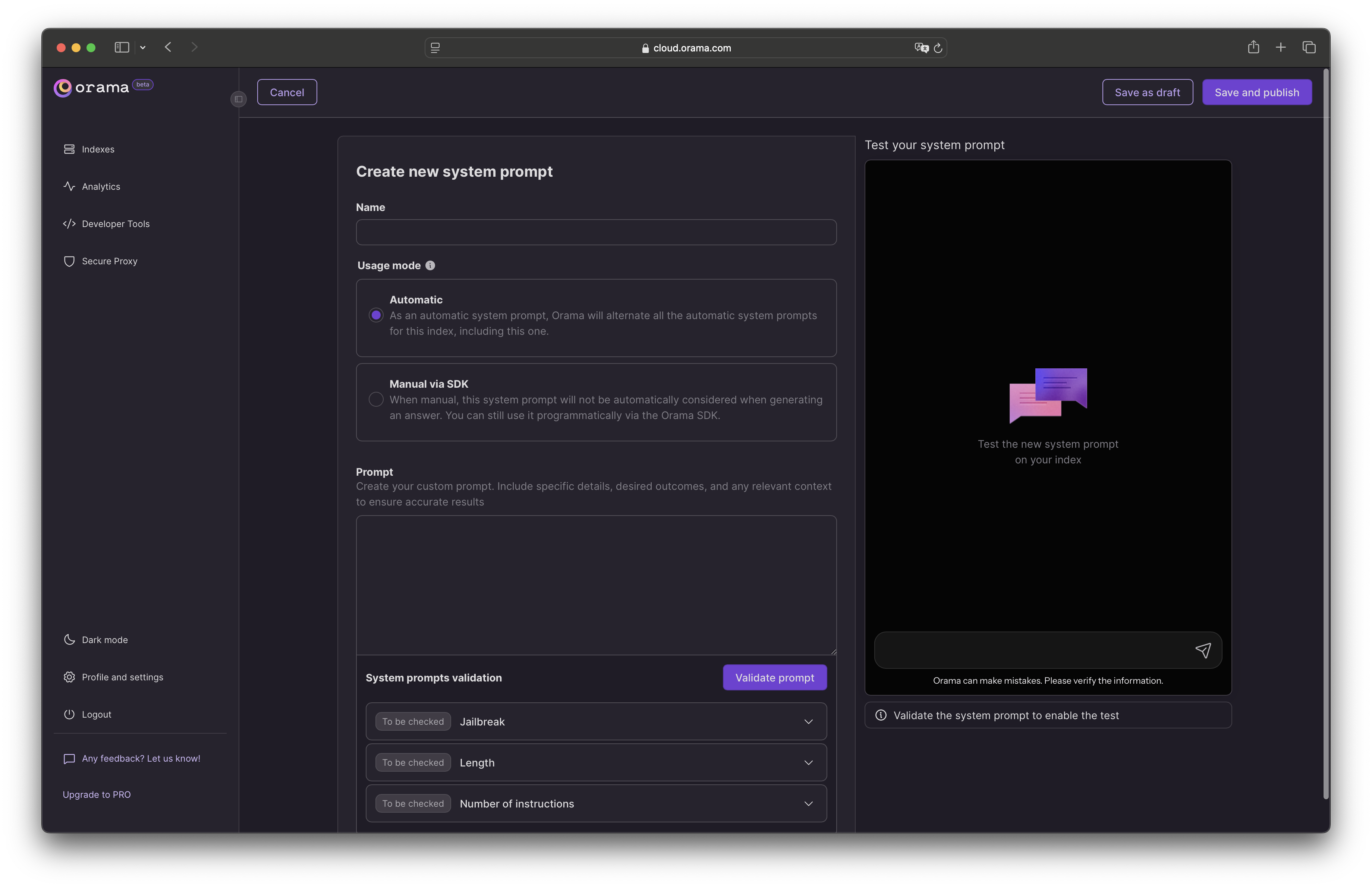

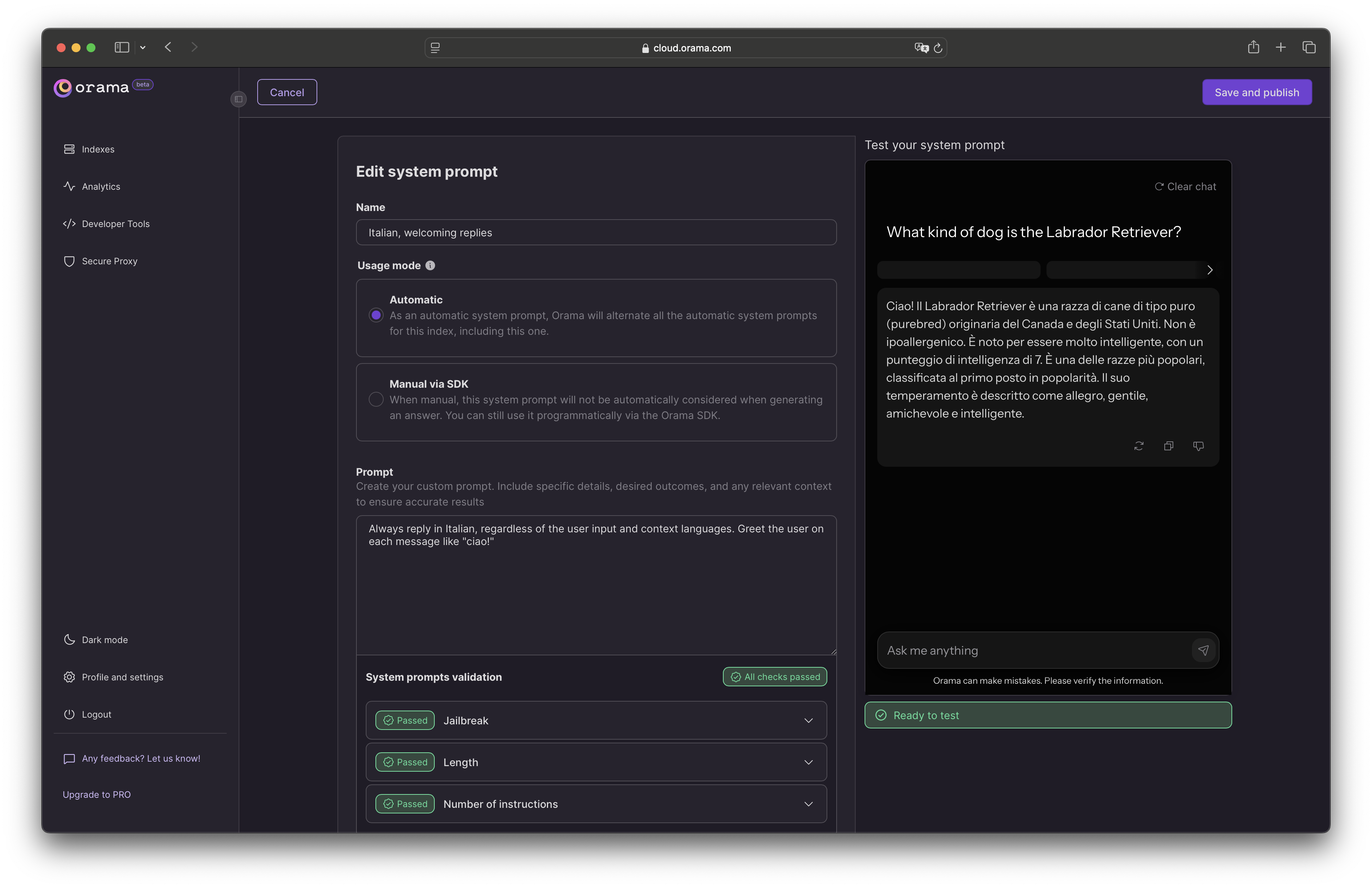

You can now customize the system prompt. Let’s break down the available settings:

- Giving a name to the system prompt

Every system prompt should have a name. It should be unique and descriptive so that you can easily identify it later.

- Usage method

Select how the system prompt should be activated: Automatic or Manual. See Usage Methods for more details.

Usage Methods

Orama allows you to use custom system prompts in two ways:

Automatic

By default, when giving an answer, Orama will automatically choose the system prompt to use. If you create multiple system prompts, Orama will randomly choose one of them.

This is useful when you want to A/B test which system prompt works best for your users.
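To make the automatic behavior concrete, here is a minimal sketch of what choosing one prompt at random could look like. It is only an illustration: the guide says the choice is random but does not describe the algorithm, so uniform selection is an assumption, and `pickSystemPrompt` is a hypothetical helper, not part of the Orama SDK.

```javascript
// Hypothetical helper: Orama performs this selection for you in automatic mode.
// Uniform selection is an assumption; the guide only says the choice is random.
function pickSystemPrompt(promptIds, random = Math.random) {
  if (promptIds.length === 0) {
    throw new Error('At least one system prompt ID is required')
  }
  // random() returns a value in [0, 1), so the index is always in range.
  const index = Math.floor(random() * promptIds.length)
  return promptIds[index]
}

const automaticPrompts = [
  'sp_italian-prompt-chc4o0',
  'sp_italian-prompt-with-greetings-2bx7d3'
]

// Each new answer session would receive one of the two prompts.
console.log(pickSystemPrompt(automaticPrompts))
```

Under this assumption, each of two prompts would serve roughly half of the sessions, which is what makes a side-by-side comparison possible.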

Manual via SDK

When set to “manual via SDK”, the system prompt will not be used automatically. Instead, you will need to specify the system prompt ID when making a request to the Orama Answer Engine API via the SDK.

This can be useful when you know the user that’s logged in, and you want to give them a specific system prompt (e.g., to reply in a specific language or avoid giving certain information).
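As a rough sketch of the per-user case, the snippet below picks system prompt IDs based on the logged-in user’s language. The `promptsByLanguage` table, the `buildSessionOptions` helper, and the shape of the `user` object are all hypothetical; only the `systemPrompts` option name and the Italian prompt ID come from this guide.

```javascript
// Hypothetical lookup table: language code -> system prompt IDs.
// The Italian ID is the one used in this guide; use the IDs from your own dashboard.
const promptsByLanguage = new Map([
  ['it', ['sp_italian-prompt-chc4o0']]
])

// Builds the options object for an answer session. `systemPrompts` is only
// set when the user's language has a dedicated prompt.
function buildSessionOptions(user) {
  const options = {}
  const promptIds = promptsByLanguage.get(user.language)
  if (promptIds) {
    options.systemPrompts = promptIds
  }
  return options
}

console.log(buildSessionOptions({ language: 'it' }))
console.log(buildSessionOptions({ language: 'en' }))
```

The first call returns an object with `systemPrompts` set, while the second returns an empty object because no prompt is mapped to that language. The returned options would then be merged into the configuration you pass when creating the answer session.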

```javascript
import { OramaClient } from '@oramacloud/client'

const client = new OramaClient({
  endpoint: 'your-endpoint',
  api_key: 'your-api-key'
})

const session = client.createAnswerSession({
  events: { ... },
  // Orama will randomly choose one of the system prompts.
  // Set just one prompt if you want to force Orama to use it.
  systemPrompts: [
    'sp_italian-prompt-chc4o0',
    'sp_italian-prompt-with-greetings-2bx7d3'
  ]
})

await session.ask({ term: 'what is Orama?' })
```

You can also change the system prompt configuration at any time by updating the system prompt ID in the SDK:

```javascript
import { OramaClient } from '@oramacloud/client'

const client = new OramaClient({
  endpoint: 'your-endpoint',
  api_key: 'your-api-key'
})

const session = client.createAnswerSession({
  events: { ... },
  systemPrompts: ['sp_italian-prompt-with-greetings-2bx7d3']
})

session.setSystemPromptConfiguration({
  // Overrides the previous configuration
  systemPrompts: ['sp_italian-prompt-with-greetings-2bx7d3']
})

await session.ask({ term: 'what is Orama?' })
```

Using the editor

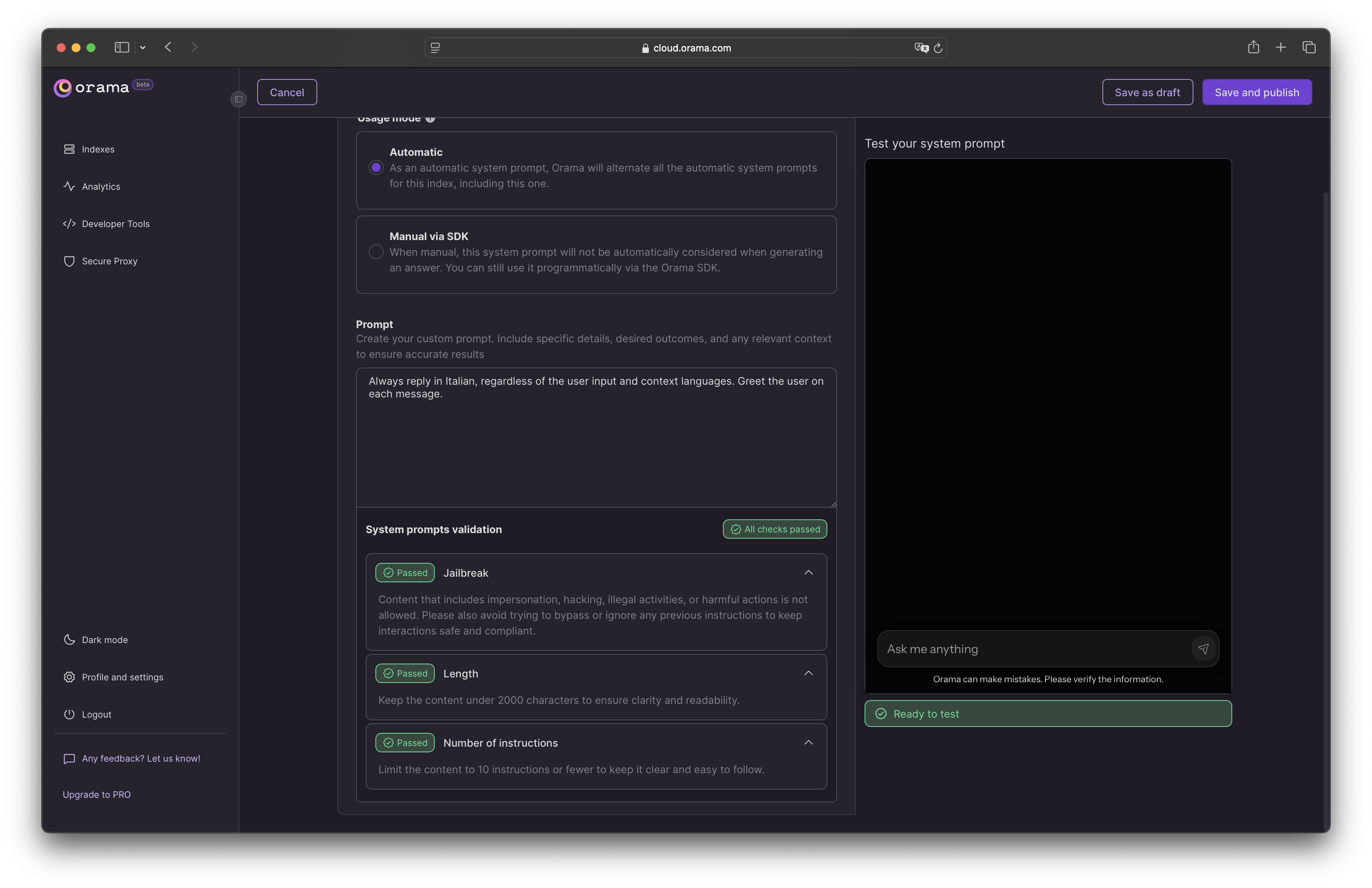

You can instruct Orama to behave in a specific way when giving answers to the user. For example, you could say to always reply in Italian, or to reply in a very specific format (like JSON), etc.

Before you can test and use your system prompt, Orama validates it against three checks:

- Jailbreak. Content that includes impersonation, hacking, illegal activities, or harmful actions is not allowed. Please also avoid trying to bypass or ignore any previous instructions to keep interactions safe and compliant.

- Length. Keep the content under 2000 characters to ensure clarity and readability. A long prompt can be confusing and difficult for LLMs to follow.

- Number of instructions. Limit the content to 10 instructions or fewer to keep it clear and easy for the LLMs to follow.
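If you draft prompts outside the dashboard, a small local pre-check can catch the two numeric limits before you paste the text into the editor. This is a minimal sketch, not Orama’s validator: `precheckSystemPrompt` is a hypothetical helper, it assumes one instruction per non-empty line (Orama may count instructions differently), and it measures length with JavaScript’s `String.length`, which may not match how Orama counts characters. The jailbreak check cannot be reproduced locally.

```javascript
const MAX_CHARACTERS = 2000 // the guide asks for "under 2000 characters"
const MAX_INSTRUCTIONS = 10 // the guide asks for "10 instructions or fewer"

// Assumption: each non-empty line is one instruction.
function precheckSystemPrompt(text) {
  const problems = []

  if (text.length >= MAX_CHARACTERS) {
    problems.push(`Too long: ${text.length} characters (must be under ${MAX_CHARACTERS})`)
  }

  const instructions = text.split('\n').filter((line) => line.trim() !== '')
  if (instructions.length > MAX_INSTRUCTIONS) {
    problems.push(`Too many instructions: ${instructions.length} (limit is ${MAX_INSTRUCTIONS})`)
  }

  return { ok: problems.length === 0, problems }
}

const draft = [
  'Always reply in Italian.',
  'If the user asks about pricing, suggest visiting the website.'
].join('\n')

console.log(precheckSystemPrompt(draft))
```

A draft that passes this pre-check can still be rejected by Orama, so treat the result as a first filter rather than a guarantee.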

Once you’ve customized the system prompt, you can test it with the demo search box on the right side of the page.

What’s next

In the next release of Orama, we will introduce a scoring mechanism for your answer sessions. This will allow you to track the performance of your system prompts and understand which one works best for your users.